Artificial Intelligence (AI) is rapidly reshaping our world, and the field of education is no exception. Its potential to revolutionize teaching methods, personalize learning experiences, and streamline administrative tasks is immense. However, this transformative power comes with a critical responsibility: to ensure that AI is implemented ethically, equitably, and safely. This post delves into how AI can be used responsibly in schools and teaching, outlining best practices, clarifying key concepts, and highlighting the importance of a human-centered approach to this burgeoning technology.

Essential Insights: Key Takeaways for Responsible AI in Education

- Prioritize Ethical Frameworks & Human Oversight: Establish and enforce clear guidelines centered on fairness, transparency, privacy, and accountability. Crucially, AI should augment, not replace, the indispensable role of educators, ensuring human judgment remains central to the educational process.

- Champion AI Literacy for All Stakeholders: Equip educators, students, and administrators with a comprehensive understanding of AI's capabilities, inherent limitations, and ethical considerations. This fosters informed decision-making and critical engagement with AI tools.

- Ensure Data Privacy and Security at Every Step: Adhere rigorously to data protection regulations (such as FERPA and GDPR) and ethical best practices. Select AI tools that prioritize data minimization and robust security measures to safeguard sensitive student and staff information.

Understanding Responsible AI in an Educational Context

Responsible AI in education refers to the development, deployment, and use of artificial intelligence technologies in a manner that is ethical, fair, transparent, secure, and beneficial to all stakeholders. It's about harnessing AI's capabilities to enhance learning outcomes and operational efficiencies while proactively mitigating potential risks such as bias, privacy violations, and the digital divide. The core aim is to ensure AI serves as a supportive tool that empowers both educators and learners.

Core Principles Guiding Responsible AI

Several fundamental principles underpin the responsible use of AI in educational settings:

- Fairness and Equity: AI systems should be designed and used to promote equal opportunities and avoid perpetuating or amplifying existing biases related to race, gender, socioeconomic status, or disability.

- Transparency: There should be clarity about how AI systems work, the data they use, their capabilities, and their limitations. Students and educators should understand when and how AI is involved in their educational experiences.

- Accountability: Clear lines of responsibility must be established for the outcomes of AI systems. Educators and institutions, not the AI itself, are ultimately accountable for decisions impacting students.

- Privacy and Security: Protecting the personal data of students and staff is paramount. AI tools must comply with relevant data protection laws and implement robust security measures.

- Reliability and Safety: AI systems should be dependable, function as intended, and not expose users to harm, misinformation, or unsafe content.

- Human-Centeredness: AI should support and enhance human capabilities, particularly the vital teacher-student relationship, rather than diminish human interaction or autonomy.

AI in Action: Transforming Schools and Teaching Practices

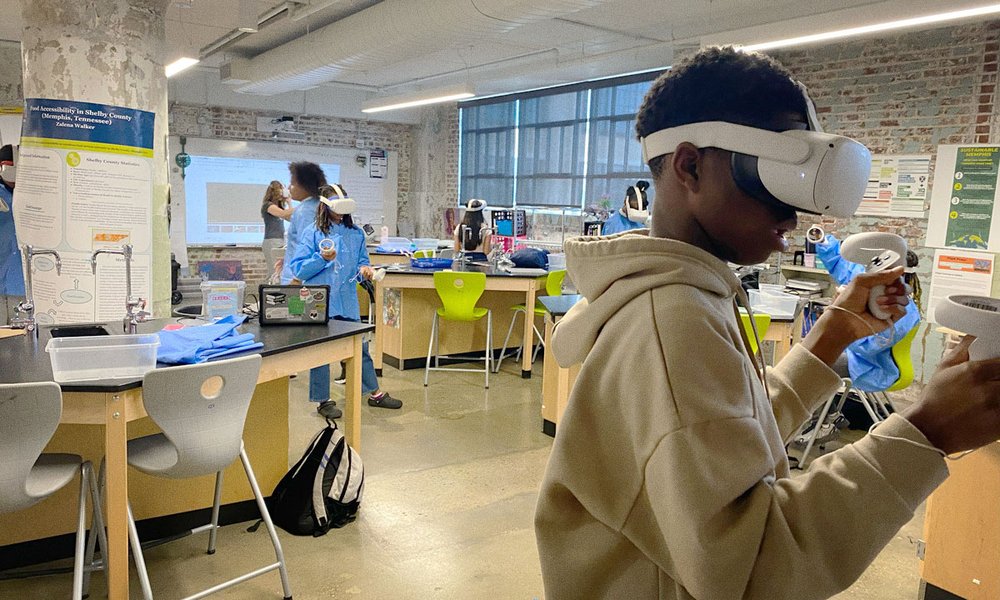

AI is no longer a futuristic concept but a present-day reality in many educational institutions. Its applications are diverse, aiming to create more effective and engaging learning environments.

Key Applications of AI in Education

Personalized Learning Pathways

AI excels at analyzing individual student performance data to tailor educational content, pace, and support. Adaptive learning platforms can identify areas where a student is struggling or excelling, offering targeted interventions, enrichment activities, or adjusting the difficulty of tasks accordingly. This allows students to learn at their own pace and in a way that best suits their individual needs.
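To make the idea of adjusting difficulty concrete, here is a deliberately simple, rule-based sketch in Python. It is not how any particular adaptive platform works (commercial systems typically rely on statistical models of student knowledge); the class name `AdaptiveDifficulty`, the five-answer window, and the 80% and 50% thresholds are hypothetical choices made purely for illustration.

```python
from collections import deque


class AdaptiveDifficulty:
    """Toy rule-based difficulty adjuster (hypothetical thresholds).

    Keeps a sliding window of a student's recent answers and moves the
    difficulty level up or down based on accuracy within that window.
    """

    def __init__(self, level=3, min_level=1, max_level=5,
                 window=5, raise_at=0.8, lower_at=0.5):
        self.level = level
        self.min_level = min_level
        self.max_level = max_level
        self.recent = deque(maxlen=window)  # True means a correct answer
        self.raise_at = raise_at
        self.lower_at = lower_at

    def record(self, correct):
        """Record one answer and return the (possibly updated) level."""
        self.recent.append(bool(correct))
        # Wait until the window is full so a single answer can't swing the level.
        if len(self.recent) < self.recent.maxlen:
            return self.level
        accuracy = sum(self.recent) / len(self.recent)
        if accuracy >= self.raise_at and self.level < self.max_level:
            self.level += 1
            self.recent.clear()  # start a fresh window at the new level
        elif accuracy <= self.lower_at and self.level > self.min_level:
            self.level -= 1
            self.recent.clear()
        return self.level


# Usage: four of five correct meets the 80% threshold, so the level rises.
tutor = AdaptiveDifficulty()
for correct in [True, True, True, True, False]:
    level = tutor.record(correct)
print(level)  # 4
```

Even in a sketch this small, the thresholds are exactly the kind of setting a school should be able to inspect and change, and a teacher should still judge whether the new level suits the student.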

Automating Administrative Burdens

Educators often spend significant time on non-teaching tasks. AI can automate many of these, such as grading multiple-choice tests, managing schedules, generating initial drafts of lesson plans, or handling routine communications. This frees up teachers to focus on direct student interaction, curriculum development, and professional growth.

Content Creation and Enhancement

AI tools can assist educators in developing and refining learning materials. This includes generating diverse examples, creating visual aids like charts and diagrams, differentiating resources for various learning levels, and even suggesting innovative ways to present complex topics.

Intelligent Tutoring and Support Systems

AI-powered tutoring systems and chatbots can provide students with instant feedback, answer frequently asked questions, and offer guidance on assignments outside of regular school hours. These tools can supplement teacher instruction and provide an additional layer of support for learners.

Early Warning and Student Support Systems

By analyzing patterns in student data (e.g., attendance, assignment completion, engagement metrics), AI can help identify students who may be at risk of falling behind, disengaging, or facing other challenges. This allows educators and counselors to intervene proactively with appropriate support.
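As a hedged illustration of how such signals might be combined, the sketch below flags a student for human review only when several indicators fall below a threshold at the same time. The field names, the thresholds, and the "at least two signals" rule are all hypothetical; real early-warning systems vary widely and may use statistical models rather than fixed cutoffs.

```python
from dataclasses import dataclass


@dataclass
class StudentSnapshot:
    # Hypothetical fields; a real system might pull these from an SIS or LMS.
    student_id: str
    attendance_rate: float        # fraction of school days attended, 0.0-1.0
    assignment_completion: float  # fraction of assignments submitted, 0.0-1.0
    lms_logins_last_week: int


# Illustrative thresholds only; each school would set and review its own.
THRESHOLDS = {
    "attendance_rate": 0.90,
    "assignment_completion": 0.75,
    "lms_logins_last_week": 2,
}


def warning_reasons(s):
    """Return human-readable reasons a student might benefit from a check-in."""
    reasons = []
    if s.attendance_rate < THRESHOLDS["attendance_rate"]:
        reasons.append(f"attendance {s.attendance_rate:.0%}")
    if s.assignment_completion < THRESHOLDS["assignment_completion"]:
        reasons.append(f"assignments submitted {s.assignment_completion:.0%}")
    if s.lms_logins_last_week < THRESHOLDS["lms_logins_last_week"]:
        reasons.append(f"LMS logins last week: {s.lms_logins_last_week}")
    return reasons


def review_queue(students, min_signals=2):
    """Build a list for a counselor to review; nothing here acts automatically."""
    queue = []
    for s in students:
        reasons = warning_reasons(s)
        if len(reasons) >= min_signals:
            queue.append((s.student_id, reasons))
    return queue


students = [
    StudentSnapshot("s1", attendance_rate=0.95, assignment_completion=0.90,
                    lms_logins_last_week=5),
    StudentSnapshot("s2", attendance_rate=0.82, assignment_completion=0.60,
                    lms_logins_last_week=1),
]
print(review_queue(students))
# [('s2', ['attendance 82%', 'assignments submitted 60%', 'LMS logins last week: 1'])]
```

Returning readable reasons rather than a bare risk score supports the transparency principle: a counselor can see why a student was flagged and decide whether the flag makes sense before reaching out.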

Enhancing Student Safety Monitoring

Some AI platforms are designed to monitor digital communications within school networks for concerning signals related to student safety, such as bullying, self-harm ideation, or threats, alerting appropriate personnel to potential issues.

Navigating the Labyrinth: Challenges and Ethical Considerations

While the benefits of AI in education are compelling, its integration is not without challenges. Addressing these concerns proactively is crucial for responsible implementation.

Key Concerns in Educational AI

- Data Privacy and Security: The collection and use of student data by AI systems raise significant privacy concerns. Ensuring compliance with regulations like FERPA, GDPR, and COPPA, and protecting data from breaches, is paramount.

- Algorithmic Bias and Fairness: AI systems are trained on data, and if that data reflects existing societal biases, the AI can perpetuate or even amplify these biases, leading to inequitable outcomes for certain student groups.

- Transparency and "Black Box" Algorithms: Understanding how complex AI models arrive at decisions can be difficult. This lack of transparency makes it harder to identify errors or biases and to hold systems accountable.

- Impact on Human Interaction and Teacher Roles: Over-reliance on AI could potentially diminish the critical human element in education, affecting the development of social-emotional skills and the quality of teacher-student relationships.

- Equitable Access and Digital Divide: Disparities in access to technology and AI literacy can exacerbate existing educational inequalities. Ensuring all students and educators have the resources and training to benefit from AI is essential.

- Over-Dependence and Academic Integrity: Students might become overly reliant on AI for tasks like writing or problem-solving, potentially hindering the development of critical thinking skills or facilitating academic dishonesty.

- Misinformation and AI "Hallucinations": Generative AI models can sometimes produce incorrect, misleading, or nonsensical information. Verifying AI-generated content is crucial.

Pillars of Responsible AI Implementation in Education

To effectively harness AI's potential while mitigating its risks, schools and educators should adopt a strategic and principled approach. This involves a commitment to ongoing learning, collaboration, and adherence to ethical best practices.

Developing Clear Policies and Ethical Guidelines

Educational institutions must establish comprehensive AI policies. These policies should be developed collaboratively with input from educators, administrators, students, parents, and technology experts. They should address:

- Permissible and Impermissible Uses of AI: Clearly define what AI can and cannot be used for by students and staff.

- Data Governance, Privacy, and Security: Specify how AI tools must handle student and staff data and how their compliance with data protection regulations is verified.

- Selecting, Vetting, and Implementing AI Tools: Establish a review process that evaluates each tool's educational value, privacy practices, and potential for bias before it is adopted.

- Transparency and Disclosure: Ensure that students, staff, and families know when and how AI is being used.

- Academic Integrity and Misuse: Set expectations for when students may use AI on assignments, how they should disclose that use, and how suspected misuse will be handled.

Prioritizing Data Privacy and Security

This cannot be overstated. Schools must:

- Choose Vendors with Strong Data Protection Commitments: Select vendors that comply with relevant data protection laws and can clearly explain how they secure student data.

- Understand the Data Lifecycle: Know what data each tool collects and how that data is used, stored, and protected.

- Implement Data Minimization Practices: Collect only the data a tool genuinely needs, and anonymize it whenever feasible.

- Ensure Transparent Consent Processes: Obtain informed consent from students and parents before collecting and using their data.

- Regularly Review and Update Data Security Protocols: Revisit safeguards as new threats and best practices emerge.
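One common way to put data minimization into practice is to strip each record down to the fields a tool actually needs and to replace the real student ID with a pseudonym before the data leaves the school. The sketch below does this with Python's standard `hmac` and `hashlib` modules; the field names, the allow-list, and the sample record are hypothetical. Note that pseudonymized data is not fully anonymous: under GDPR it still counts as personal data, because whoever holds the key can re-identify it.

```python
import hashlib
import hmac

# Hypothetical: the only fields this particular AI tool needs for its task.
ALLOWED_FIELDS = {"grade_level", "reading_score"}


def pseudonymize_id(student_id, secret_key):
    """Replace a real student ID with a keyed hash (HMAC-SHA256).

    The school keeps secret_key. Without it, a vendor cannot link a pseudonym
    back to a student by hashing candidate IDs. The same ID always yields the
    same pseudonym, so records can still be matched over time.
    """
    digest = hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def minimize_record(record, secret_key):
    """Drop every field not on the allow-list and pseudonymize the ID."""
    minimized = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    minimized["pseudonym"] = pseudonymize_id(record["student_id"], secret_key)
    return minimized


key = b"load-this-from-a-secret-store"  # hypothetical; never hard-code a real key
record = {
    "student_id": "S12345",
    "name": "Jordan Lee",
    "date_of_birth": "2012-04-01",
    "grade_level": 7,
    "reading_score": 82,
}
print(minimize_record(record, key))  # name and date of birth never leave the school
```

The allow-list approach fails safe: a new field added to the school's records is excluded by default until someone deliberately decides the tool needs it.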

Fostering AI Literacy for All

AI literacy involves understanding what AI is, how it works, its potential benefits and risks, and how to interact with it critically and ethically.

For Educators:

- Provide Ongoing Professional Development (PD): Help teachers understand AI's pedagogical applications, ethical implications, and how to integrate AI tools effectively and responsibly into their teaching practices.

For Students:

- Incorporate AI Literacy into the Curriculum: Teach students about AI concepts, how to critically evaluate AI-generated content, the importance of citing AI assistance, and the ethical considerations of using AI tools.

Ensuring Human Oversight and Accountability

AI should serve as an assistant, not a replacement for human judgment.

- Teachers Must Remain in Control of Pedagogical Decisions: Educators are the ultimate decision-makers for pedagogy, curriculum content, and student assessment.

- AI-Generated Suggestions Should Be Reviewed, Validated, and Modified by Educators: Outputs such as suggested grades or lesson plans are drafts for a teacher to check and adapt, not final decisions.

- There Should Be Clear Mechanisms for Appealing AI-Influenced Decisions: Establish processes for raising concerns about, and correcting errors in, decisions that AI has influenced.
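For schools building or configuring their own tools, one way to enforce this is to treat every AI suggestion as a draft that cannot reach the gradebook until a named teacher approves or overrides it. The minimal Python sketch below shows the idea; `GradeSuggestion`, `approve`, and `publishable` are hypothetical names, not part of any real product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GradeSuggestion:
    """An AI-suggested grade that stays a draft until a teacher acts on it."""
    student_id: str
    ai_suggested: float
    ai_rationale: str
    final_grade: Optional[float] = None
    reviewed_by: Optional[str] = None

    def approve(self, teacher, override=None):
        """Record the teacher's decision; an override replaces the AI value."""
        self.final_grade = self.ai_suggested if override is None else override
        self.reviewed_by = teacher

    @property
    def is_final(self):
        return self.reviewed_by is not None


def publishable(suggestions):
    """Only teacher-reviewed grades are ever released to the gradebook."""
    return {s.student_id: s.final_grade for s in suggestions if s.is_final}


a = GradeSuggestion("s1", ai_suggested=85.0, ai_rationale="Rubric criteria 1-3 met")
b = GradeSuggestion("s2", ai_suggested=72.0, ai_rationale="Missing conclusion")
a.approve("Ms. Rivera", override=88.0)  # teacher disagrees and adjusts
print(publishable([a, b]))  # {'s1': 88.0}; s2 is still awaiting review
```

Storing `reviewed_by` alongside the AI's rationale also leaves a record that supports the appeal process: anyone questioning a grade can see what the AI suggested and which educator made the final call.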

Promoting Equity and Inclusivity

AI implementation must actively work to close, not widen, educational gaps.

- Ensure equitable access to AI tools and the necessary technological infrastructure for all students.

- Select and design AI systems that are inclusive and accessible to students with disabilities.

- Continuously monitor AI tools for biases and take corrective action.

Addressing Bias and Ensuring Algorithmic Fairness

This requires a proactive approach:

- Critically evaluate AI tools for potential biases before adoption.

- Engage with vendors about their efforts to mitigate bias in their algorithms.

- Train educators to recognize and address biases in AI outputs.

- Complement AI-driven insights with diverse forms of assessment and teacher judgment.
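A basic first check when evaluating a tool is to compare how often it produces a given outcome (for example, recommending students for an advanced course) across student groups. The sketch below computes each group's rate and the ratio of the lowest rate to the highest. Borrowing the "four-fifths" rule of thumb from U.S. employment-selection guidelines, a ratio below 0.8 is treated here as a signal to investigate, not as proof of bias. The group labels and counts are made up, and rates from small groups are noisy.

```python
from collections import defaultdict


def rates_by_group(outcomes):
    """outcomes: iterable of (group_label, received_outcome: bool) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, positive in outcomes:
        totals[group] += 1
        positives[group] += int(positive)
    return {g: positives[g] / totals[g] for g in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received the outcome, so rates are equal
    return min(rates.values()) / highest


# Hypothetical audit data: 10 students per group.
audit = ([("Group A", True)] * 8 + [("Group A", False)] * 2
         + [("Group B", True)] * 5 + [("Group B", False)] * 5)
rates = rates_by_group(audit)
print(rates)                   # {'Group A': 0.8, 'Group B': 0.5}
print(disparity_ratio(rates))  # 0.625, below 0.8, so worth a closer look
```

A low ratio tells you where to look, not why the gap exists; the follow-up questions for the vendor and the teacher judgment described above are still needed to interpret it.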

Continuous Evaluation, Adaptation, and Collaboration

The field of AI is rapidly evolving. Educational institutions should:

- Regularly assess the effectiveness and impact of AI tools.

- Gather feedback from educators, students, and parents.

- Stay informed about new developments and emerging best practices in educational AI.

- Foster partnerships between educational institutions, AI developers, researchers, and ethicists to co-create responsible AI solutions.

Visualizing Priorities: Responsible AI Adoption Focus Areas

Successfully integrating AI into education requires a multi-faceted approach. The radar chart below plots hypothetical scores (out of 10) for key dimensions of responsible AI adoption in an illustrative school, comparing three series: 'Strategic Priority', 'Current Implementation Level', and 'Resource Allocation Needed' to close the gap between them. A higher score indicates greater priority, implementation, or need for resources. The chart is meant to show where focus and investment may be needed, not to report measured data.

In this illustrative scenario, many aspects of responsible AI rank as high priorities while their implementation lags, pointing to a need for targeted resources in areas such as student AI literacy, algorithmic fairness, and educator training.

Guiding Principles in Practice: A Classroom Snapshot

The following table outlines core principles of responsible AI and provides concrete examples of how they can be applied in the classroom, making these abstract concepts more tangible for educators.

| Principle | Core Meaning | Classroom Application Example |
|---|---|---|
| Fairness & Equity | Ensuring AI tools do not perpetuate or amplify biases and provide equal opportunities for all learners. | Using AI to differentiate instruction and provide personalized support for diverse learners, while regularly auditing tools for biased outputs or recommendations and supplementing with teacher observation. |
| Transparency | Clearly understanding and communicating how AI tools function, what data they use, their strengths, and their limitations. | Teachers explicitly explaining to students when and how an AI tool (e.g., an AI writing assistant) is being used for an activity, discussing its purpose, how its suggestions are generated, and its potential for inaccuracies. |
| Accountability & Human Oversight | Maintaining human responsibility for AI-driven decisions and educational outcomes; teachers remain the ultimate decision-makers. | Teachers reviewing, modifying, and approving AI-suggested grades, lesson plans, or student feedback, rather than accepting them automatically. AI acts as a co-pilot, not the pilot. |
| Privacy & Security | Protecting student and staff data from unauthorized access and ensuring strict compliance with data protection laws (e.g., FERPA, GDPR). | Selecting AI platforms whose vendors contractually commit to complying with relevant privacy regulations, anonymizing student data whenever feasible, and educating students on protecting their personal information online. |
| AI Literacy | Educating all users (students, teachers, administrators) about AI concepts, capabilities, ethical considerations, and responsible usage. | Integrating age-appropriate lessons on how AI algorithms work, how to critically evaluate AI-generated content, the importance of proper citation for AI assistance, and discussing real-world ethical dilemmas of AI use. |
| Reliability & Safety | Ensuring AI tools are dependable, accurate in their intended functions, and do not expose users to harm, inappropriate content, or significant misinformation. | Cross-verifying AI-generated information (e.g., historical facts, scientific data) with multiple credible sources before incorporating it into lessons, and teaching students to develop this critical habit. |

Perspectives on AI in Education

The integration of AI into education is a multifaceted topic, sparking discussions among educators, policymakers, and technologists worldwide. The video below offers insights into the evolving landscape of AI in educational settings, touching upon new developments and pertinent questions raised by language models and other AI advancements.


Key Terminologies and Abbreviations Explained

Understanding the language of AI is essential for informed discussion and decision-making.

- AI (Artificial Intelligence): Technology enabling machines to simulate human intelligence, performing tasks like learning, problem-solving, and decision-making.

- Adaptive Learning: Educational technology that dynamically adjusts the content and pacing of learning based on individual student performance and needs.

- Algorithm: A set of rules or instructions given to an AI system or computer to help it learn, solve problems, or make decisions.

- Bias (in AI): Systematic errors or prejudices in AI systems, often stemming from biased training data or flawed algorithm design, leading to unfair or discriminatory outcomes.

- Chatbot: An AI program designed to simulate human conversation, often used for customer service, information retrieval, or as a tutoring aid.

- COPPA (Children's Online Privacy Protection Act): A U.S. federal law that imposes certain requirements on operators of websites or online services directed to children under 13 years of age.

- FERPA (Family Educational Rights and Privacy Act): A U.S. federal law that protects the privacy of student education records.

- GDPR (General Data Protection Regulation): A European Union regulation on data protection and privacy for all individuals within the EU and the European Economic Area.

- Generative AI: A type of AI that can create new content, such as text, images, audio, or video, based on the data it has been trained on.

- Hallucinations (AI): Instances where an AI model, particularly a generative AI, produces confident but incorrect, nonsensical, or fabricated information.

- IEP (Individualized Education Program): A legally binding document in the U.S. developed for each public school child who is eligible for special education.

- ISTE (International Society for Technology in Education): A non-profit organization serving educators interested in the use of technology in education.

- K-12: Refers to the range of school grades from Kindergarten through 12th grade.

- LMS (Learning Management System): Software application for the administration, documentation, tracking, reporting, automation, and delivery of educational courses or training programs (e.g., Canvas, Moodle, Google Classroom).

- MIT RAISE (Responsible AI for Social Empowerment and Education): An initiative at MIT focused on advancing AI education and empowering PreK-12 students, teachers, and communities.

- PD (Professional Development): Training and education opportunities for educators to enhance their skills and knowledge.

- PII (Personally Identifiable Information): Any data that could potentially identify a specific individual.

- Scaffolding (Educational): An instructional technique where teachers provide successive levels of temporary support that help students reach higher levels of comprehension and skill acquisition.

- SOC 2 (System and Organization Controls 2): An auditing framework developed by the American Institute of CPAs (AICPA) under which an independent auditor reports on how a service organization manages customer data, based on the Trust Services Criteria: security, availability, processing integrity, confidentiality, and privacy. Undergoing a SOC 2 audit is voluntary.

Conclusion

The integration of AI into education represents both an exciting opportunity and a significant responsibility. By approaching this integration thoughtfully, with a commitment to ethical principles and a focus on enhancing rather than replacing human connections, we can harness AI's potential to create more personalized, efficient, and equitable learning environments.

The key is to maintain a balanced perspective: embracing innovation while remaining mindful of potential pitfalls. With clear policies, ongoing education, and a collaborative approach involving all stakeholders, AI can become a powerful tool in our educational toolkit, helping to prepare students for a future where AI literacy will be as fundamental as digital literacy is today.

What steps is your educational institution taking to responsibly integrate AI? We'd love to hear your experiences and insights in the comments below!
